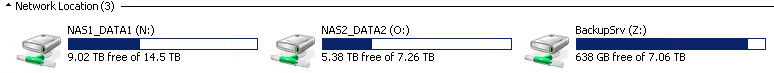

The time has come to increase storage capacity in the home lab. I expect that before the end of this year I will have less than 1TB of free space left on my primary data NAS. That is a problem, and an expensive one at that. At the time of this writing I have 1.66TB of 7.21TB free (77% used). My data is currently growing by 3-5% per month on average, which gives me about 2-3 months before I’m in a critical state.
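The post doesn’t say whether the 3-5% monthly growth is a share of total capacity or of the data already stored, so the minimal sketch below tries both readings. It assumes the critical point is 1TB free (taken from the first sentence above); the function names are hypothetical and this is not the author’s own tooling.

```python
# Rough storage runway estimate using the figures from this post.
# Assumption: "3-5% per month" is read two ways, because the post does
# not say what the percentage is relative to.
import math

TOTAL_TB = 7.21          # usable capacity of NAS 1
FREE_TB = 1.66           # free space at time of writing
CRITICAL_FREE_TB = 1.0   # assumed critical threshold: "less than 1TB" free


def months_linear(free_tb, total_tb, threshold_tb, rate):
    """Months until free space hits the threshold if data grows by
    `rate` x total capacity every month (straight-line growth)."""
    monthly_growth_tb = rate * total_tb
    return (free_tb - threshold_tb) / monthly_growth_tb


def months_compound(free_tb, total_tb, threshold_tb, rate):
    """Months until free space hits the threshold if the amount of
    stored data grows by `rate` each month (compounding growth)."""
    used_tb = total_tb - free_tb
    limit_tb = total_tb - threshold_tb
    return math.log(limit_tb / used_tb) / math.log(1 + rate)


for rate in (0.03, 0.05):
    lin = months_linear(FREE_TB, TOTAL_TB, CRITICAL_FREE_TB, rate)
    comp = months_compound(FREE_TB, TOTAL_TB, CRITICAL_FREE_TB, rate)
    print(f"{rate:.0%}/month: {lin:.1f} months (linear), {comp:.1f} months (compound)")
```

Under the linear reading this works out to roughly 1.8 to 3.1 months, which lines up with the 2-3 month estimate; the compounding reading stretches it to roughly 2.3 to 3.8 months.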

Adding storage to the primary data/media pools also means adding storage to the backup pools. You won’t catch me without a backup; you only need to be burned once to learn that harsh lesson. Seagate has come out with an 8TB drive meant for backups only, which will help with backup capacity. Overall, I have been pretty skeptical of these 8TB drives. It is strongly advised not to use them in a RAID setup because they use SMR (shingled magnetic recording), which partially overlaps the tracks on the platter, like shingles on a roof, to increase platter density (tracks per inch, or TPI). Because writing one track can disturb the overlapping tracks next to it, sustained random writes and RAID rebuilds can be very slow. With that said, they seem to be fairly robust. While one could argue that I could (should?) delete some stuff, I strongly disagree. I am a data hoarder. Do you literally throw out all your books after you’re done reading them? Probably not. The same goes for data.

Upgrading the primary NAS means I’ll need to rebuild RAID arrays, use NAS 2 as “swing” storage, move data onto the upgraded NAS 1, rebuild NAS 2, and so on. This will take a couple of days of just moving data around while ensuring I have a backup at all times. During the swing process I am particularly vulnerable to drive failure. My backup NAS 2 is currently in a JBOD configuration, so if any one of its drives fails during this read/write-intensive transfer, it’s game over. For that reason I will be making a second backup onto the 8TB Seagate drive, just in case.
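To put a rough number on that risk, the sketch below compares the chance of losing data during the swing for five drives in JBOD versus the same five drives in RAID 5. It assumes every drive fails independently with the same hypothetical probability `p` over the copy window (the post gives no such figure), treats any JBOD failure as total loss, and ignores read errors during a RAID 5 rebuild. The function names are illustrative only.

```python
# Chance of data loss during the swing copy: JBOD vs. single-parity RAID 5.
# Assumptions (not from the post): independent failures, one hypothetical
# per-drive failure probability p for the whole transfer window.
from math import comb


def p_jbod_loss(n, p):
    """JBOD (no redundancy): any single drive failure counts as data loss."""
    return 1 - (1 - p) ** n


def p_raid5_loss(n, p):
    """RAID 5 survives one failure; data is lost only if two or more fail.
    Unrecoverable read errors during a rebuild are ignored here."""
    p_none = (1 - p) ** n
    p_one = comb(n, 1) * p * (1 - p) ** (n - 1)
    return 1 - p_none - p_one


p = 0.01  # hypothetical per-drive failure probability during the copy
n = 5     # NAS 2 holds five drives in JBOD today
print(f"JBOD:   {p_jbod_loss(n, p):.4%}")
print(f"RAID 5: {p_raid5_loss(n, p):.4%}")
```

With these made-up inputs JBOD comes out around 4.9% and RAID 5 around 0.1%. The absolute numbers mean little, but the gap shows why a second copy on the 8TB drive is worth having while NAS 2 is still a JBOD.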

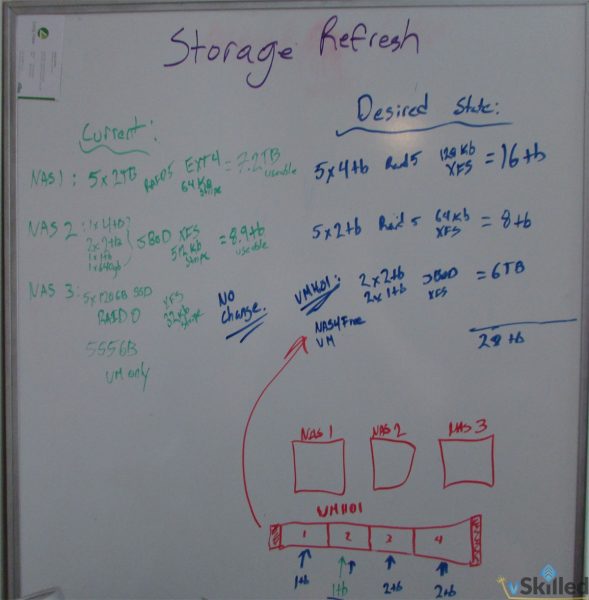

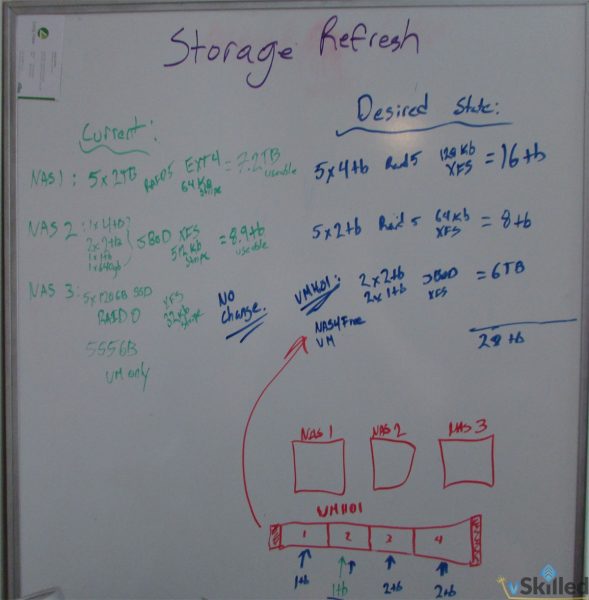

The plan is to convert NAS 1 to 5 x 4TB in RAID 5, and NAS 2 to what NAS 1 is currently (5 x 2TB in RAID 5). I’ll then be leveraging my VMH01 (Dell C1100) for the backup pool drives (2 x 8TB, 2 x 2TB in JBOD), served up by a NAS4Free virtual machine. To help wrap my head around what I am doing, I like to draw things out on my whiteboard. Here is my “draft” design. Apologies for the chicken scratch.

I’ll be re-purposing an existing 4TB drive from NAS 2 and moving it into the NAS 1 RAID pool, which is why I am only purchasing 4 x 4TB drives, as seen below. This saves me the cost of buying another 4TB drive.

I will be running a multi-vendor setup with a mix of Seagate and Western Digital drives. That should make things a little more robust in the long term, since drives from different vendors and batches are less likely to fail at the same time. Currently I only have desktop-rated drives in the primary NAS, which, per the manufacturer’s guidelines, are rated for a maximum of two drives in RAID 0 or RAID 1 and only for 8×5 use (8 hours a day, 5 days a week). The WD Red and Seagate NAS series drives are designed for home NAS devices and servers. They offer a good price-to-performance ratio and have a few features that make them more suitable for RAID arrays, such as TLER (time-limited error recovery), higher vibration tolerance (which should result in a longer lifespan), lower power consumption, and a 24/7 duty rating.

| Drive | Quantity x Unit Price | Line Total (CAD) |
| --- | --- | --- |
| Seagate ST8000AS0002 8TB 5900RPM 128MB Cache SATA3 Archive Hard Drive OEM (for backup data only) | 2 x $319.99 | $639.98 |
| Western Digital WD40EFRX 4TB Red SATA3 6Gb/s 64MB Cache 3.5in Hard Drive | 2 x $209.99 | $419.98 |
| Seagate ST4000VN000 4TB 64MB Cache SATA 6Gb/s 3.5in Internal NAS Hard Drive | 2 x $199.99 | $399.98 |
| Total | | $1,459.94 |
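The arithmetic behind the drive table can be double-checked with a few lines of Python. This is a minimal sketch: the prices and quantities are copied from the table (CAD), `Decimal` is used so currency values don’t pick up floating-point rounding, and the per-TB column is an extra comparison not in the original table.

```python
# Re-add the drive cost table and compare price per TB.
# Prices and quantities come from the table above (CAD).
from decimal import Decimal

drives = [
    # (model, capacity in TB, quantity, unit price)
    ("Seagate ST8000AS0002", 8, 2, Decimal("319.99")),
    ("WD WD40EFRX", 4, 2, Decimal("209.99")),
    ("Seagate ST4000VN000", 4, 2, Decimal("199.99")),
]

grand_total = Decimal("0")
for model, tb, qty, unit in drives:
    line_total = qty * unit
    grand_total += line_total
    # Unit price divided by capacity, rounded to cents.
    per_tb = (unit / tb).quantize(Decimal("0.01"))
    print(f"{model}: {qty} x ${unit} = ${line_total} (${per_tb}/TB)")

print(f"Total: ${grand_total}")
```

The line totals add up to the $1,459.94 grand total, and the 8TB Archive drives come out cheapest per TB at about $40/TB, versus about $50 to $52.50/TB for the 4TB NAS drives.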

All said and done, I will end up with two large data/media storage pools with roughly 22TB of usable combined storage.

That is a considerable increase over my existing data capacity of only 7.21TB, and the idea is for it to last at least three years. NAS 2, which is currently a JBOD used only for backups, will become another usable RAID 5 protected data pool, and each NAS will be backed up to VMH01’s backup storage JBOD.

| Device | Current Drive Layout | Current Capacity | Desired Drive Layout | Desired Capacity |
| --- | --- | --- | --- | --- |
| NAS 1 (Thecus N5550) | 5 x 2TB (RAID 5) | 7.21TB | 5 x 4TB (RAID 5) | ~16TB |
| NAS 2 (Thecus N5550) | 1 x 4TB, 2 x 2TB, 1 x 1TB, 1 x 640GB (JBOD) | 8.9TB | 5 x 2TB (RAID 5) | ~7.25TB |
| VMH01 (Dell C1100) | 1 x 1TB | 1TB | 2 x 8TB, 2 x 2TB (JBOD) | ~20TB |
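As a sanity check on the capacity figures, the sketch below computes usable space for each planned layout, assuming RAID 5 gives up one member drive’s worth of space to parity, JBOD gives up nothing, and filesystem overhead is ignored. It also converts vendor (decimal) terabytes into the binary TiB that NAS interfaces often report as “TB”, which appears to be why NAS 1 is listed at ~16TB while the combined total is quoted as ~22TB. The function names are hypothetical.

```python
# Usable capacity for the planned layouts, in vendor (decimal) TB and
# binary TiB. Assumptions: RAID 5 loses one drive to parity, JBOD loses
# nothing, filesystem overhead is ignored.
TB = 10**12   # bytes in a vendor terabyte
TIB = 2**40   # bytes in a tebibyte


def usable_tb(drives_tb, layout):
    """Raw usable capacity in decimal TB for a list of drive sizes."""
    if layout == "raid5":
        if len(drives_tb) < 3:
            raise ValueError("RAID 5 needs at least three drives")
        # Parity consumes the equivalent of one (smallest) member drive.
        return (len(drives_tb) - 1) * min(drives_tb)
    if layout == "jbod":
        return sum(drives_tb)
    raise ValueError(f"unknown layout: {layout}")


def to_tib(tb):
    """Convert decimal TB to binary TiB."""
    return tb * TB / TIB


plan = {
    "NAS 1": ([4] * 5, "raid5"),
    "NAS 2": ([2] * 5, "raid5"),
    "VMH01 backup": ([8, 8, 2, 2], "jbod"),
}

for name, (drives, layout) in plan.items():
    tb = usable_tb(drives, layout)
    print(f"{name}: {tb} TB = {to_tib(tb):.2f} TiB")

data_pools_tib = to_tib(usable_tb([4] * 5, "raid5")) + to_tib(usable_tb([2] * 5, "raid5"))
print(f"Combined data pools: {data_pools_tib:.1f} TiB")
```

In TiB the two data pools come to about 14.55 and 7.28, or roughly 21.8 combined, which is close to the ~22TB figure; the current NAS 1 reporting 7.21TB rather than 7.28 is consistent with some filesystem overhead on top of this.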

I’ll be sure to post updates with pictures of the build and upgrade process when the time comes. For now, I’ll be trying to save up some cash to make this plan come together.