I’ve been involved in setting up some very large Windows DHCP deployments during my time working as a Consultant at Long View Systems. Along the way I’ve run into some interesting challenges and caveats with Windows DHCP, especially when working with DHCP-enabled dynamic DNS updates. I wanted to write a quick post about this for my own reference, and hopefully it will come in handy for others as well.

- DHCP Failover Scopes

- Administration Overhead

- DhcpLogFilesMaxSize

- DynamicDNSQueueLength

- DnsRegistrationMaxRetries

DHCP Failover Scopes

I’ve covered this topic extensively in my Windows Server 2012 R2 – DHCP High Availability / Fail-over Setup Guide series. Basically, if you are deploying Windows DHCP on Windows Server 2012 or later, you should be using DHCP Failover (not to be confused with split-scope or Microsoft failover clustering).

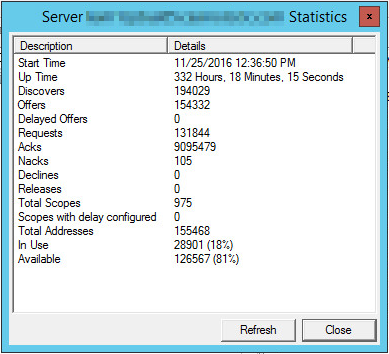

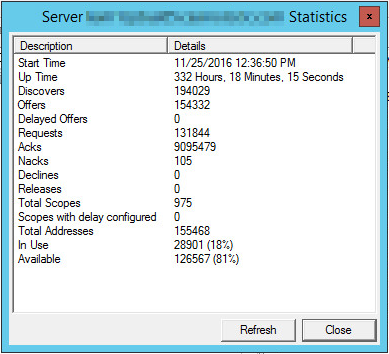

Administration Overhead

If you’re working with more than 100 scopes using only the default DHCP MMC snap-ins, you’re gonna have a bad time.

Almost 1,000 DHCP scopes, 150k+ IP addresses

Performing administration tasks in the console with a large number of scopes becomes very repetitive and time consuming, as each task normally requires many clicks. Making mass changes is also very difficult, or next to impossible. You may find yourself becoming familiar with PowerShell scripting to resolve this problem. The DHCP Server cmdlets in Windows PowerShell are very easy to use, and Microsoft has great documentation on them. I found myself writing PowerShell scripts to make mass changes much easier and less vulnerable to the human error that comes with the very repetitive nature of the default GUI.
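To give a rough idea of what such a mass change can look like, here is a minimal sketch that sets the same lease duration on every IPv4 scope that doesn’t already have it. The server name and the four-day lease are placeholders I made up for illustration, not values from a real deployment, so adjust them to your environment.

```powershell
# Sketch only: the server name and lease duration are hypothetical placeholders.
Import-Module DhcpServer

$server = 'dhcp01.example.com'      # hypothetical DHCP server name
$lease  = New-TimeSpan -Days 4      # hypothetical target lease duration

# Fetch every IPv4 scope once, then keep only those with a different lease duration.
$scopes = Get-DhcpServerv4Scope -ComputerName $server |
    Where-Object { $_.LeaseDuration -ne $lease }

foreach ($scope in $scopes) {
    # -WhatIf only previews the change; remove it to actually apply the new lease.
    Set-DhcpServerv4Scope -ComputerName $server -ScopeId $scope.ScopeId `
        -LeaseDuration $lease -WhatIf
}
```

Running it with `-WhatIf` first is a cheap way to confirm which scopes would be touched before committing a change across hundreds of them.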