Home Lab

Last Updated: September 26, 2017

This is an overview of the vSkilled home lab environment. A home lab is a great learning tool for any tech-savvy geek.

The lab runs 24/7/365 and draws approximately 600W of power on average. For years now my home lab has been a critical part of my home. It is quite literally the backbone that runs the infrastructure for the rest of the electronics in the house.

I am always making tweaks and changes, so I will do my best to keep this page updated. Please check the “Last Updated” date above!

The vSkilled Home Lab includes the following components broken down into categories.

- Rack Hardware

- 4-Tier Black Wire Shelf

- Power / Temperature

- APC Back-UPS Pro 1000 (1000VA / 600W) x 3

- Cooling

- Industrial Floor Fan x 2

- Ceiling Intake Fan

- Security

- Foscam FI9821WBV2 HD 1280x720p H.264 Pan/Tilt IP Camera with IR-Cut Filter

- Networking

- Firewall – Custom 1U Supermicro, Intel Xeon X3470, 8GB RAM, 250GB HDD

- Netgear GS748Tv3 48-Port 1GbE Switch (CSW 1)

- Netgear GS724Tv3 24-Port 1GbE Switch (CSW 2)

- Netgear GS108T-200NAS Prosafe 8 Port 1GbE Switch (CSW 3)

- Ubiquiti Networks UAP-AC-LITE UniFi Access Point Enterprise Wi-Fi System x 2

- Compute

- Dell PowerEdge C1100 CS24-TY LFF, 2 x Xeon L5520s, 72GB ECC RAM (VMH01)

- Custom Whitebox – Supermicro, 2 x Xeon X5570, 96GB ECC RAM (VMH02)

- NAS/SAN Storage

- Thecus N5550 5-Bay (NAS 1)

- Thecus N5550 5-Bay (NAS 2)

- Thecus N5550 5-Bay (NAS 3)

- Storage Drives (approx.)

- Seagate ST4000VN000 4TB 64MB NAS x 3

- Western Digital WD40EFRX 4TB Red x 2

- Western Digital Green 2TB x 4

- Seagate Desktop 1TB x 2

- Seagate Desktop 2TB x 3

- Seagate Desktop 4TB x 1

- Kingston HyperX 3K SSD 120GB x 8

- Other Gear

My virtual machines:

- AD/DNS/DHCP

- Plex

- SmarterMail

- LibreNMS

- Confluence

- phpIPAM

- Veeam

- vCenter

- MySQL

- TCAdmin

- and others

Rack Hardware

I use a 4-tier wire shelf as the rack for the vSkilled Lab. This allows for a more “open” design and keeps everything in close proximity to power, network, and compute hardware. In terms of environmental needs, installing hardware in an open-air, mount-less environment like this arguably does not help with airflow or with keeping system and component temperatures down… but that’s just part of the challenge of having a home lab: power and cooling. You’ve got to work with what you’ve got.

In my current layout, I dedicate one shelf to compute infrastructure and the others to networking, storage, and power gear, as well as more transient hardware that only stays for the length of a product review, for example. This layout allows me to keep cable routing neatly organized.

Everything on the rack is connected to one of the three uninterruptible power supplies (UPS) in case of a power failure. The lab lasts approximately 15-20 minutes on battery power before the batteries run dry. The security camera records all motion activity in the room. It saves video directly to the NAS servers and stores a picture every 2 seconds on a local SD card (in case the NAS is down for whatever reason).
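As a rough sanity check on the UPS figures, the sketch below works out how heavily each unit is loaded and how much energy reaches the load during the quoted 15-20 minute runtime. It assumes the load is split evenly across the three 600W APC units in the component list, which is an assumption rather than a measurement, and it ignores inverter losses, so actual battery drain will be somewhat higher.

```python
# Rough UPS sizing check. ASSUMPTION: the lab's load is split evenly across
# the three UPS units; real per-unit loads depend on what is plugged into each.
UPS_RATED_W = 600  # APC Back-UPS Pro 1000 output rating (1000VA / 600W)
UPS_COUNT = 3      # from the component list


def load_fraction(total_watts: float, ups_count: int = UPS_COUNT,
                  rated_watts: float = UPS_RATED_W) -> float:
    """Fraction of each UPS's watt rating in use, assuming an even split."""
    return (total_watts / ups_count) / rated_watts


def energy_delivered_wh(total_watts: float, minutes: float) -> float:
    """Energy (Wh) delivered to the load; battery drain is higher due to inverter losses."""
    return total_watts * minutes / 60


if __name__ == "__main__":
    for watts in (600, 800):  # average and peak draw quoted on this page
        print(f"{watts} W total: {load_fraction(watts):.0%} of each UPS rating")
    for minutes in (15, 20):  # quoted battery runtime
        print(f"{minutes} min at 600 W: {energy_delivered_wh(600, minutes):.0f} Wh")
```

With those inputs each unit sits at about a third of its rating on average (33%) and under half at peak (44%), and the load receives roughly 150-200 Wh over the runtime. Actual runtime still depends on battery capacity and age, which this sketch does not model.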

On the top-left portion of the top shelf there is a smoke detector (the white round thing). Right beside the door is a fire extinguisher. You know… just in case. I would hate to burn down the building or cause injury because of my home lab. Safety first!

Photos: the rack, the Netgear GS748Tv3 48-Port 1GbE Switch, the fire extinguisher close by, and the rack lit with red, green, and blue LEDs.

Video tour of the lab – in “disco” mode! This is really just for demonstration. I normally don’t have it cycling through colors like this because it’s a bit too much like having a nightclub in the house. I normally keep it solid blue. 🙂

Network

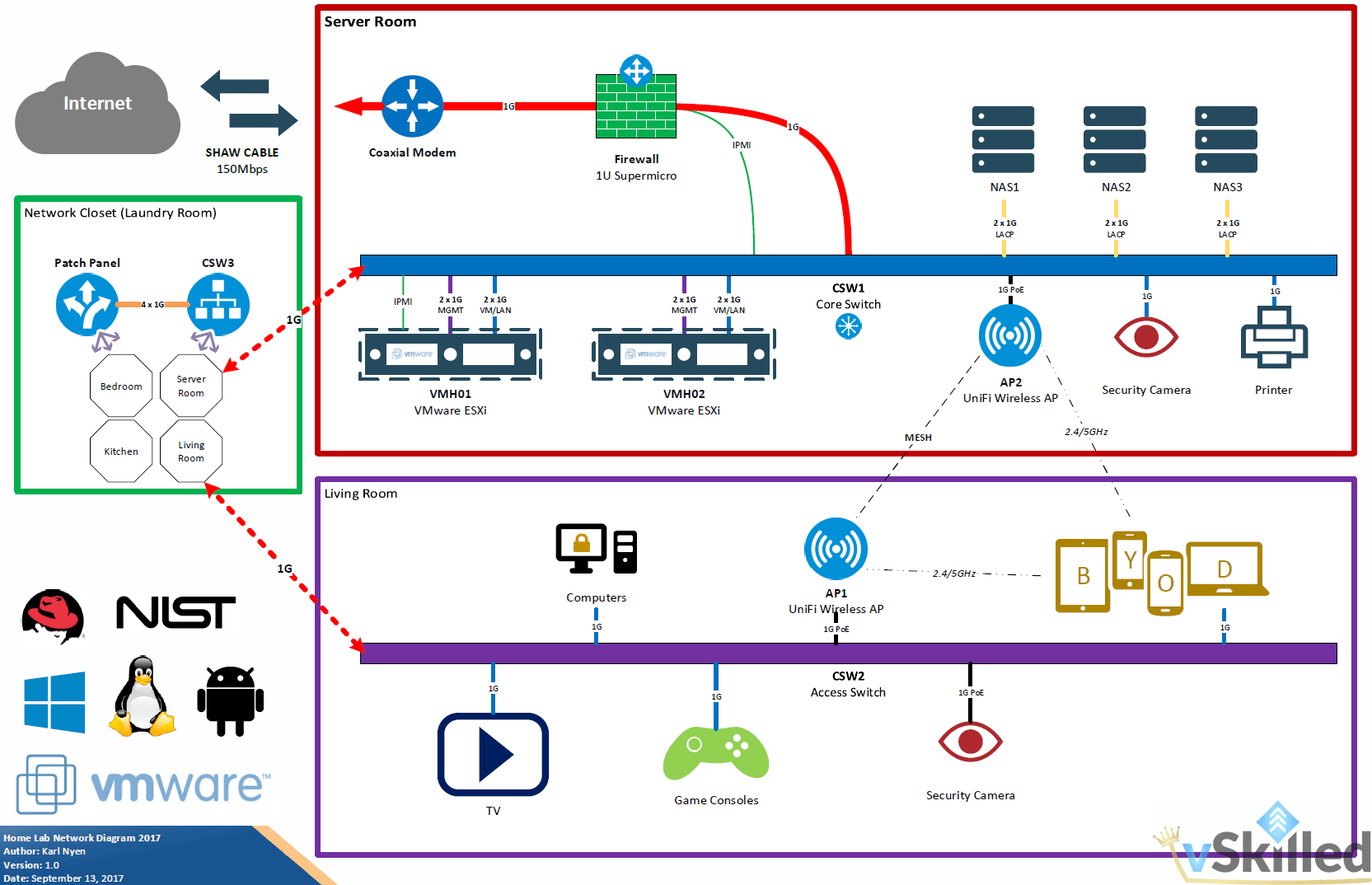

Network: Overview

I try to keep my network as clean and simple as possible. I currently use no VLANs in my network except on VMware vSwitches for isolated testing. I plan to add VLANs later on, but I just haven’t gotten around to it yet.

I use Untangle as my firewall and router. The firewall runs on a Supermicro 1U server.

Each VM host has 2 x MGMT and 2 x VM traffic uplinks, which gives me link redundancy on both the management and VM traffic adapters. In a production environment these connections would be split across two stacked switches for switch-level redundancy, but the hosts all connect to a single switch.
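The difference between link redundancy and switch redundancy can be made concrete with a small model. This sketch is hypothetical: the switch names (`CSW1-A`, `CSW1-B`) and port numbers are made up for illustration and do not describe the lab's actual cabling. For each traffic type it checks whether a host survives losing one link and whether it survives losing a whole switch.

```python
from collections.abc import Mapping, Sequence

# HYPOTHETICAL cabling: switch names and port numbers are illustrative only.
Uplink = tuple[str, int]  # (switch name, switch port)


def check_redundancy(
    uplinks: Mapping[str, Sequence[Uplink]],
) -> dict[str, dict[str, bool]]:
    """Report link-level and switch-level redundancy per traffic type."""
    report: dict[str, dict[str, bool]] = {}
    for traffic, links in uplinks.items():
        switches = {switch for switch, _port in links}
        report[traffic] = {
            # Survives one cable, NIC port, or switch port failing.
            "link_redundant": len(links) >= 2,
            # Survives a whole switch failing only if links land on 2+ switches.
            "switch_redundant": len(switches) >= 2,
        }
    return report


# Two MGMT and two VM uplinks, all on one switch.
single_switch = {
    "mgmt": [("CSW1", 1), ("CSW1", 2)],
    "vm": [("CSW1", 3), ("CSW1", 4)],
}

# The same uplinks split across a hypothetical stacked pair.
stacked_pair = {
    "mgmt": [("CSW1-A", 1), ("CSW1-B", 1)],
    "vm": [("CSW1-A", 2), ("CSW1-B", 2)],
}

if __name__ == "__main__":
    print("single switch:", check_redundancy(single_switch))
    print("stacked pair: ", check_redundancy(stacked_pair))
```

For the single-switch layout both traffic types come back link-redundant but not switch-redundant; the stacked pair is redundant on both counts, which is the gap a second switch would close.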

Network: CSW1 (Core Switch 1)

The core/aggregation layer of my network runs on a very reliable Netgear GS748Tv3 48-Port 1GbE Switch. Almost all network and storage traffic passes through CSW1, which moves about 15,000GB+ of data per month on average. The stock fans in this switch are very loud, so I swapped them out for much quieter fans that still give adequate airflow.
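To put the monthly figure in perspective, converting it to an average bit rate shows how much of a single 1GbE port that volume represents over time. This sketch assumes the 15,000GB figure is total traffic over a 30-day month in decimal gigabytes. Real traffic is bursty, so peaks will be far higher than the average.

```python
def average_mbps(gigabytes: float, days: float = 30) -> float:
    """Average rate in Mbit/s for a volume of decimal GB moved over `days` days."""
    bits = gigabytes * 1e9 * 8
    seconds = days * 24 * 60 * 60
    return bits / seconds / 1e6


if __name__ == "__main__":
    avg = average_mbps(15_000)  # ASSUMPTION: 15,000GB over a 30-day month
    print(f"average: {avg:.1f} Mbit/s")
    print(f"share of one 1GbE link: {avg / 1000:.1%}")
```

That works out to roughly 46 Mbit/s, under 5% of one gigabit link, spread across all of the switch's ports.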

Photo: Netgear GS748Tv3 48-Port 1GbE Switch

Network: CSW2 (Core Switch 2)

The living room’s primary connectivity hub. Lots of room for expansion here. Mainly connects my primary desktop PC, the wireless router, game consoles, the TV, and my Media PC. This switch is fanless.

Photo: Netgear GS724Tv3 24-Port switch

Network: CSW3 (Core Switch 3)

My apartment has CAT5e run in the walls to various rooms. That means my server room essentially just needs to plug into the CAT5e wall socket for connectivity to the rest of the house. That is where CSW3 comes in. It’s located in a small network closet in the laundry room, which is also where the ISP coaxial cable feed comes in. CSW3 is a rather robust Netgear GS108T-200NAS Prosafe 8 Port 1GbE Switch. It is basically the interconnect between the server room and the rest of the house: home devices, wireless clients, and everything else from other rooms of the house pass through here.

Photos: Netgear GS108T-200NAS switch and patch panel.

Network: Wireless

For wireless communication I use Ubiquiti Networks UAP-AC-LITE access points configured in a mesh. For cooling and stability it has its own dedicated Thermaltake cooling pad, complete with blue LEDs! (because why not, right?!)

Compute / VM Hosts

The virtual machine hosts power all my virtual machines, which at this point account for about 95% of my servers (everything besides networking gear and the hosts themselves). They run VMware ESXi and boot from a micro-USB flash drive. I am using compatible Intel CPUs so that I can enable VMware EVC (Enhanced vMotion Compatibility) mode and vMotion VMs between the hosts. VMH01 is a dual-socket configuration loaded up with 72GB of RAM. VMH02 is a Supermicro white-box server I built myself, also in a dual-socket configuration.

- VMH01: Dell PowerEdge C1100 CS24-TY LFF

- CPU: 2 x Intel L5520 @ 2.27GHz

- RAM: 72GB DDR3 ECC

- 7 x Network Ports

- 2 x Intel 82576 1Gb Ethernet (2 Ports)

- 1 x Intel I340-T4 1Gb Ethernet (4 Ports)

- 1 x iLO Management @ 100Mbps

- VMH02: Supermicro X8DT3/X8DTi Whitebox

- CPU: 2 x Intel Xeon X5570 @ 2.93GHz

- RAM: 96GB DDR3 ECC

- 7 x Network Ports

- 1 x Intel 82576 Dual-Port Gigabit (2 Ports)

- 1 x Intel I340-T4 1Gb Ethernet (4 Ports)

- 1 x IPMI Management @ 100Mbps
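A quick capacity summary of the two hosts helps when deciding whether all VMs can be moved onto one host for maintenance. The core and thread counts come from Intel's published specs for these parts (both the L5520 and the X5570 are 4-core CPUs with Hyper-Threading). The "largest host fails" check is a simplified sketch that ignores memory overcommit and hypervisor overhead.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Host:
    name: str
    sockets: int
    cores_per_socket: int
    threads_per_core: int  # 2 with Hyper-Threading enabled
    ram_gb: int

    @property
    def cores(self) -> int:
        return self.sockets * self.cores_per_socket

    @property
    def threads(self) -> int:
        return self.cores * self.threads_per_core


HOSTS = [
    Host("VMH01", sockets=2, cores_per_socket=4, threads_per_core=2, ram_gb=72),  # 2 x Xeon L5520
    Host("VMH02", sockets=2, cores_per_socket=4, threads_per_core=2, ram_gb=96),  # 2 x Xeon X5570
]


def ram_if_largest_host_fails(hosts: list[Host]) -> int:
    """RAM left for VMs after losing the biggest host (ignores overcommit and overhead)."""
    return sum(h.ram_gb for h in hosts) - max(h.ram_gb for h in hosts)


if __name__ == "__main__":
    print("cores:", sum(h.cores for h in HOSTS))
    print("threads:", sum(h.threads for h in HOSTS))
    print("RAM (GB):", sum(h.ram_gb for h in HOSTS))
    print("RAM with largest host down (GB):", ram_if_largest_host_fails(HOSTS))
```

Together the hosts provide 16 cores, 32 threads, and 168GB of RAM, but with VMH02 down only VMH01's 72GB remains, so running everything on one host requires the powered-on VMs to fit in roughly that much memory.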

Photos: VMH02 front, rear, and interior views, including the 750W Corsair power supply.

Storage

I currently use 3 x Thecus N5550 storage appliances exclusively for my storage needs. I started off with one and have been so impressed by them that I just kept buying more. In my opinion, the Thecus N5550 is the most cost-effective 5-bay NAS on the market today; no other 5-bay NAS comes close to this price point. They are extremely reliable, have great performance, and use very little power. I would have preferred to go the Synology route, but I just couldn’t justify their premium price point.

Each of the storage appliances is connected to the CSW1 core switch using 2 x 1Gbps copper links in an LACP link aggregation group (LAG). This combines the two links for added aggregate bandwidth (up to 2Gbps across multiple connections, although any single connection is still limited to 1Gbps) and adds link redundancy. All storage network cables are yellow for easy visual identification.
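LACP does not split a single transfer across both links. The sender picks a member link per flow by hashing header fields, so one connection tops out at 1Gbps while many connections can use both links. This sketch illustrates the idea with a SHA-256 hash of the IP addresses and ports. That hash is a stand-in: real switches and NAS units use their own hash policies, and the addresses and ports here are hypothetical.

```python
import hashlib
from collections import Counter

LAG_MEMBERS = 2  # two 1Gbps links per NAS


def pick_member(src_ip: str, dst_ip: str, src_port: int, dst_port: int,
                members: int = LAG_MEMBERS) -> int:
    """Map a flow to one LAG member by hashing its addresses and ports.

    SHA-256 is a stand-in for illustration; real devices use their own
    hash policies (often a simple XOR of header fields).
    """
    key = f"{src_ip}|{dst_ip}|{src_port}|{dst_port}".encode()
    digest = hashlib.sha256(key).digest()
    return int.from_bytes(digest[:4], "big") % members


if __name__ == "__main__":
    # HYPOTHETICAL addresses: one client talking to one NAS.
    flow = ("192.168.1.50", "192.168.1.20", 51000, 2049)
    # The same flow always hashes to the same member, so it is capped at 1Gbps.
    print("one flow ->", {pick_member(*flow) for _ in range(5)})
    # Many flows (different source ports) spread across both members.
    spread = Counter(
        pick_member("192.168.1.50", "192.168.1.20", port, 2049)
        for port in range(50000, 51000)
    )
    print("1000 flows ->", dict(spread))
```

Because the mapping is deterministic per flow, packets within a connection stay in order; the trade-off is that a single large copy never sees more than one link's worth of bandwidth.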

- NAS 1 – 14.54TB – RAID5, 5 x 4TB HDD. Used for primary file storage. RAM upgraded to 4GB.

- NAS 2 – 9.08TB – RAID0, 5 x 2TB HDD. Used for backup/alt storage. Great IOPS for running a few VMs, etc.

- NAS 3 – 437GB – RAID5, 5 x 120GB SSD. High performance NFS storage used only for VM storage. RAM upgraded to 4GB.
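The listed capacities line up with simple RAID arithmetic once decimal terabytes are converted to binary units. This sketch only models RAID0 (striping, no parity) and RAID5 (one disk's worth of parity) and ignores filesystem overhead.

```python
def usable_tb(level: str, disks: int, disk_tb: float) -> float:
    """Usable array size in decimal TB, before filesystem overhead."""
    if level == "RAID0":
        data_disks = disks          # striping only, no parity
    elif level == "RAID5":
        data_disks = disks - 1      # one disk's worth of distributed parity
    else:
        raise ValueError(f"level not modelled in this sketch: {level}")
    return data_disks * disk_tb


def tb_to_tib(tb: float) -> float:
    """Decimal terabytes (10**12 bytes) to binary tebibytes (2**40 bytes)."""
    return tb * 10**12 / 2**40


ARRAYS = {
    "NAS 1": ("RAID5", 5, 4.0),   # 5 x 4TB HDD
    "NAS 2": ("RAID0", 5, 2.0),   # 5 x 2TB HDD
    "NAS 3": ("RAID5", 5, 0.12),  # 5 x 120GB SSD
}

if __name__ == "__main__":
    for name, (level, disks, size_tb) in ARRAYS.items():
        tb = usable_tb(level, disks, size_tb)
        print(f"{name}: {tb:g} TB usable = {tb_to_tib(tb):.3f} TiB")
```

The results (about 14.55, 9.09, and 0.437 TiB) closely match the 14.54TB, 9.08TB, and 437GB figures above, which suggests the NAS reports capacity in binary units even though it labels them TB/GB.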

Photos: systems back online, now color coded by power feed; NAS1; kitty approved!

Power & Cooling

Power and cooling are arguably the biggest obstacles to having a larger home lab environment, besides licensing. Virtualization, however, has vastly helped with this problem: the fewer pieces of physical equipment you need to have turned on, using power and generating heat, the better! I used to have my firewall as a physical box but switched over to a VM to save power and reduce heat output.

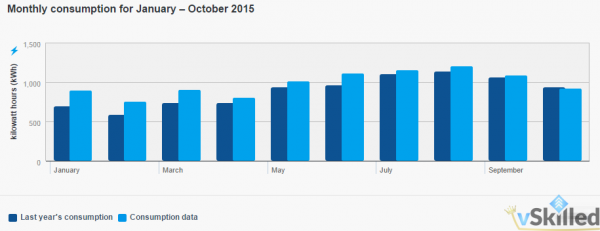

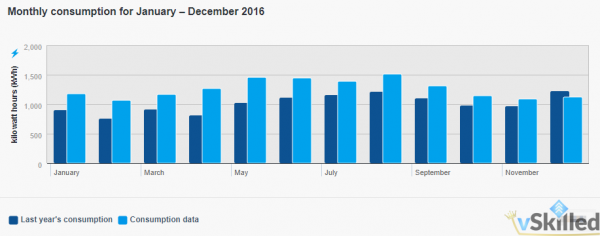

During the summer months my lab keeps the air conditioner working at maximum capacity, and during the winter months the home lab helps keep the house at a nice, cozy temperature. Obviously this translates into higher power usage during the summer months, but surprisingly not by much: only about a 100kWh difference.

Charts: Power Usage 2015; Power Usage 2016.

My home lab draws approximately 600W of power on average, around the clock. This fluctuates based largely on server load; at peak it runs at more like 650-800W+. I am lucky to live in western Canada, where power is relatively cheap compared to other places in the world. I pay $0.0797 per kWh for the first 1,350 kWh (Step 1), then $0.1195 per kWh above the 1,350 kWh Step 1 threshold (Step 2), plus $0.1764 per day. Obviously, most of my usage is charged at Step 2 pricing. All said, I pay about $220 – $290 CAD per 60-day billing period, so in the end about $110 – $145 CAD per month, which is pretty good all things considered!
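The two-step rate structure is easier to reason about in code. This sketch assumes the 1,350 kWh Step 1 threshold applies per 60-day billing period and ignores taxes and any other fees; `other_kwh` (the rest of the household's usage) is a hypothetical input. Computing the lab's cost as "bill with the lab minus bill without it" correctly handles the case where the lab's usage straddles the threshold.

```python
STEP1_RATE = 0.0797          # $/kWh up to the threshold
STEP2_RATE = 0.1195          # $/kWh above the threshold
STEP1_THRESHOLD_KWH = 1350   # ASSUMPTION: applies per 60-day billing period
DAILY_CHARGE = 0.1764        # $/day


def bill(kwh: float, days: int = 60) -> float:
    """Two-step bill for one billing period, before taxes and other fees."""
    step1_kwh = min(kwh, STEP1_THRESHOLD_KWH)
    step2_kwh = max(kwh - STEP1_THRESHOLD_KWH, 0)
    return step1_kwh * STEP1_RATE + step2_kwh * STEP2_RATE + days * DAILY_CHARGE


def lab_kwh(avg_watts: float, days: int = 60) -> float:
    """Energy used by a constant load over the billing period."""
    return avg_watts * 24 * days / 1000


def lab_cost(avg_watts: float, other_kwh: float, days: int = 60) -> float:
    """Marginal cost of the lab: the bill with it minus the bill without it."""
    return bill(other_kwh + lab_kwh(avg_watts, days), days) - bill(other_kwh, days)


if __name__ == "__main__":
    print(f"lab energy per period: {lab_kwh(600):.0f} kWh")
    # HYPOTHETICAL: rest of the household already past the Step 1 threshold.
    cost = lab_cost(600, other_kwh=1400)
    print(f"lab cost: ${cost:.2f} per period, about ${cost / 2:.2f} per month")
```

At 600W around the clock the lab uses 864 kWh per 60-day period. If the rest of the household is already past the threshold, all of that is billed at Step 2, adding about $103 per billing period, or roughly $52 CAD per month.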

Cooling is another problem I struggle with. In my current setup I do not have a window available to hook up a portable AC unit. Luckily there is a ceiling vent fan that pulls air from the room and vents it outside, but its contribution to keeping the room cool is trivial at best. I looked into something like a swamp cooler but decided that was a bad idea. Swamp coolers use an evaporative cooling technique that requires water. You would just end up blowing moist, humid air into an area that is sensitive to moisture (which could be disastrous for server equipment) and having to refill a water tank every couple of hours. No thanks!

My only option for cooling at this point is fans, which circulate the air and push it out of the room. The door to the server room is cracked open slightly to let cool air from the house flow in, while the fans push the hot exhaust from the servers out.
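Since essentially all of the power the lab draws ends up as heat in the room, a rough airflow target can be estimated with the common sensible-heat rule of thumb for air, BTU/hr = 1.08 × CFM × ΔT(°F). The temperature rise values below are assumptions to plug in, not measurements from this room, and the 1.08 factor assumes standard air density near sea level.

```python
WATTS_TO_BTU_PER_HR = 3.412   # 1 W of continuous load is about 3.412 BTU/hr
SENSIBLE_HEAT_FACTOR = 1.08   # BTU/hr per CFM per degree F, standard air


def heat_btu_per_hr(watts: float) -> float:
    """Heat output, treating all electrical input as heat in the room."""
    return watts * WATTS_TO_BTU_PER_HR


def airflow_cfm(watts: float, delta_t_f: float) -> float:
    """Airflow needed so the exhaust air is delta_t_f degrees F warmer than intake."""
    return heat_btu_per_hr(watts) / (SENSIBLE_HEAT_FACTOR * delta_t_f)


if __name__ == "__main__":
    for watts in (600, 800):          # average and peak draw from this page
        for rise_f in (10, 20):       # ASSUMED allowable temperature rise
            print(f"{watts} W, {rise_f} F rise: "
                  f"{heat_btu_per_hr(watts):.0f} BTU/hr, {airflow_cfm(watts, rise_f):.0f} CFM")
```

At the 600W average that is about 2,047 BTU/hr, and holding the exhaust to a 10°F rise over the air coming in through the door takes roughly 190 CFM moving through the room; allowing a larger rise lowers the airflow needed proportionally.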

Have a comment or question? Leave a comment below!

Changelog

2017-09-26:

- Many changes. See Home Lab Rebuild.

- New firewall (Custom 1U Supermicro)

- Re-designed virtual switching, and overall network cleanup

- Added one additional UPS backup power unit

- Gear is now color coded based on UPS power feed (red, yellow, green)

2016-07-28:

- Added a new Ubiquiti Networks security camera

- Added two new Ubiquiti Networks UAP-AC-LITE

- VMH02: 96GB ECC RAM upgrade

2016-04-28:

- Updates, Editing, Formatting, and Clean-up

2016-04-18:

- NAS4 died due to LSI 9260-8i catastrophic failure. HDDs moved into NAS2. CPU/Mobo/RAM swapped to MediaPC. Other hardware will be re-used in another project.

- NAS2 was JBOD w/ mixed disks. NAS2 is now RAID0 w/ 5x2TB HDD (WD Green and Seagate Barracuda), for non-redundant backup storage.

2016-02-04:

- Updated network diagram

- Updated network overview

2016-01-08:

- Updated power usage chart, includes 2014-2015 usage

2015-12-19:

- Added lots of new pictures (and replaced some old pics)

- Added NAS 4

- Updated VMH02

2015-10-30:

- Now using a vSphere Distributed Switch (VDS) instead of a vSphere Standard Switch (VSS)

- VMH02 replaced with newly built Supermicro Whitebox. Pictures coming soon once build is finished.

- NAS1 upgraded to 5x4TB RAID5, details updated.

- NAS2 upgraded to 5x2TB RAID5, details updated.

- Updated monthly power consumption graph for January – October 2015

Noaman Khan

Karl,

Would you be kind enough to tell me which motherboard you ended up using for “VMH02 Supermicro X8DT3/X8DTi Whitebox”?

Karl Nyen

It’s right in the name, the motherboard is the Supermicro X8DT3/X8DTi. 😀

Noaman Khan

Karl, first, thank you for clarifying the motherboard model. Second, I’m confused by your other post, where you mention replacing VMH02 with an i7 chip and a 1366 X86 chipset motherboard, since this post was updated in 2016.

I’m looking to build an ESXi server that will need to run 8 VMs 24/7 with a total of 12-13 vCPUs allocated. Any thoughts?

Karl Nyen

That’s an old post now. At the time of this writing, the LGA 1366 / i7 board is used in my MediaPC, and VMH02 is the VMware host.

You could probably get away with any single- or dual-socket configuration with a half-decent CPU. It really depends on your VM load and your back-end storage capabilities.

Homelabber

Hi Karl, how do you split out your WAN connection from the cable modem to both ESXi hosts without VLANs?

Karl Nyen

Sorry for the delay. Each host has a private vSwitch dedicated to the “WAN” uplink NIC. That vSwitch hosts only the “WAN uplink” VM portgroup, which is assigned only to the firewall VM. This allows me to run my firewall VM on any of my ESXi hosts without any interruption whatsoever. I can even live migrate (vMotion) the firewall between hosts while streaming Netflix (for example) without a single dropped ping.

Homelabber

So the physical WAN link out of your cable modem goes into a switch and from there connects to both hosts? Is it on a VLAN or is the switch just a small ‘dumb’ unmanaged switch? It doesn’t seem to be any of the three switches you detail, unless I missed something.

Thanks!

Karl Nyen

The ISP’s modem is in bridged mode so that it’s no longer functioning as a router/gateway. Each ESXi host is physically connected directly to the modem (to vSwitch1) for the firewall WAN uplink. There is no physical switch between the modem and the hosts, besides the vSwitch. I could probably use a switch and/or VLANs in the future if I start to run out of ports from adding more hosts.

David

Awesome, I look forward to it! I have a somewhat similar home lab, all physical, and it “works” as a home lab, but I’m way over my head when it comes to getting all the networking done “right”.

David

Do you have a nitty-gritty “how-to” for setting up your ESXi host networking, the physical networking, storage subnets, etc.? Just wondering, as you have an awesome physical setup and it looks so well organized.

Karl Nyen

Hi David. I currently don’t have an in-depth guide, but I do plan on writing one as a blog post at some point. I am constantly changing and tweaking things in my lab, so it’s hard to put this kind of detailed info on this page since it would constantly be outdated. 🙂

Amon

Seeing this kind of homelab inspires me to build my own. Now I’m planning to build my own NAS, but I could not find a suitable case to build it in.

I saw you found a computer case with plenty of space to host 8 disks. Could you tell us its brand and model?

Congratulations, and thanks for your lab.

Karl Nyen

I used the Fractal Design Define R4 case for the 8-bay custom build. There are many interesting 8×3.5″ cases to choose from however.

Jack

Hi,

That was a good read. A lot of compute and a lot of storage. What most impressed me is your professional approach to your home lab.

I don’t have nearly the same amount of equipment as you do, but I have also taken a professional approach to both documenting and setting up the logical and physical parts of the lab. It’s one of the parts that I think most homelab owners neglect. Networking is, in my opinion, the part most homelab owners struggle with, and I believe this has to do with overcomplicating the problem and a lack of documentation.

So just wanted to give you a thumbs up on a job well done :).