Today we will be talking about the VMware vCenter 6.7 U1 (Update 1) upgrade process. I recently had the opportunity to work with an enterprise customer to upgrade their VMware environment. In this post we will go through the upgrade process and my thoughts on it. vCenter 6.7 U1 is a major update that includes the fully featured HTML5 client. For full details on what’s new, see: https://blogs.vmware.com/vsphere/2018/10/whats-new-in-vcenter-server-6-7-update-1.html

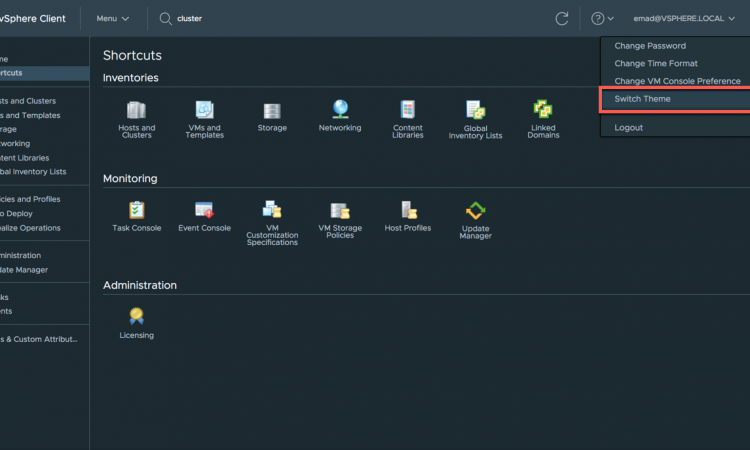

I will start by saying bravo to the VMware team for this release. For the first time, I actually felt comfortable abandoning the good ol’ “fat client” (the legacy C# client). In my experience, many of VMware’s customers were intentionally lagging behind on older versions of vCenter to keep a cold death-grip on the fat client, because they refused to be force-fed the Flash client that we all know and despise. The HTML5 client is a worthy successor. It’s fast, it looks good, it’s organized better, and it even has a dark mode. It’s obvious they took feedback from the community, hired the right developers who understood their target audience, and put out a great product. The upgrade and migration process is also done very well.

After the VCSA and HTML5 client had a few weeks to bake in the customer’s environment, it’s clear that some features, such as exporting events, are still missing from the HTML5 client, but I expect these to be added eventually. There also appears to be some lag in the Recent Tasks list in larger linked environments. I’ve also seen a few UI bugs when adding permissions and modifying SDRS configuration.

One issue I’ve seen on multiple VCSAs so far is that the database “archive” partition (disk 13) constantly fills up, causing the VCSA to show up as degraded in the dashboard. You will be greeted with the error message “File system /storage/archive is low on storage space. Increase the size of disk /storage/archive.” There is very little documentation on this, but apparently it is expected behavior despite the warnings, for reasons I don’t quite understand yet. That didn’t stop me from increasing the disk size slightly (KB2126276). [2019-04-12: This issue is now fixed by VMware.]
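If you want to keep an eye on the archive partition yourself before the dashboard flags it, the following is a minimal Python sketch using only the standard library (`shutil.disk_usage`). It assumes Python 3.6+ is available wherever you run it, and the 80% warning threshold is a hypothetical value chosen for illustration, not the threshold the VCSA actually uses for its health alarm. When `/storage/archive` does not exist (for example, off the appliance), it falls back to checking `/`.

```python
import os
import shutil

# Mount point named in the VCSA error message.
ARCHIVE_PATH = "/storage/archive"

# Hypothetical warning threshold (percent used); not VMware's actual alarm value.
WARN_PERCENT = 80.0


def usage_percent(total, used):
    """Return used space as a percentage of total capacity."""
    if total <= 0:
        raise ValueError("total must be positive")
    return used * 100.0 / total


def check_mount(path=ARCHIVE_PATH, warn_percent=WARN_PERCENT):
    """Return (percent used, low-space flag) for the filesystem holding path."""
    du = shutil.disk_usage(path)  # named tuple: total, used, free (bytes)
    pct = usage_percent(du.total, du.used)
    return pct, pct >= warn_percent


if __name__ == "__main__":
    # Fall back to the root filesystem when not running on the appliance.
    target = ARCHIVE_PATH if os.path.exists(ARCHIVE_PATH) else "/"
    pct, low = check_mount(target)
    status = "LOW ON SPACE" if low else "OK"
    print(f"{target}: {pct:.1f}% used ({status})")
```

A check like this only tells you when the partition is filling; actually growing the disk still follows the procedure in KB2126276.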